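This tutorial explains how to set up Kubernetes from scratch. The content comes from each component's official documentation, organized and annotated here. Every program is at its latest version as of September 4, 2023; Kubernetes itself is v1.28.1.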

I. Install k8s

1. Install the container runtime (containerd)

1.1 Prerequisites

Reference: Container Runtimes. The detailed steps are as follows:

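    # Load the required kernel modules now and on every boot
    cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
    overlay
    br_netfilter
    EOF

    sudo modprobe overlay
    sudo modprobe br_netfilter

    # sysctl parameters required by the setup; they persist across reboots
    cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
    net.bridge.bridge-nf-call-iptables = 1
    net.bridge.bridge-nf-call-ip6tables = 1
    net.ipv4.ip_forward = 1
    EOF

    # Apply the sysctl parameters without rebooting
    sudo sysctl --system

    # Verify that the modules are loaded and the parameters are set to 1
    lsmod | grep br_netfilter
    lsmod | grep overlay
    sysctl net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward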
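The final verification step above can also be scripted. The following is a minimal sketch, not part of the official instructions: `check_sysctl` is a hypothetical helper that reads `sysctl` output on stdin and flags every parameter that is not set to 1. The demo feeds it canned input so that it runs on any machine.

```shell
#!/bin/sh
# check_sysctl (hypothetical helper): reads `sysctl` output lines of the form
# "key = value" on stdin and reports every key whose value is not 1.
check_sysctl() {
  bad=0
  while IFS='=' read -r key value; do
    # Strip the whitespace that sysctl prints around "=".
    key=$(printf '%s' "$key" | tr -d '[:space:]')
    value=$(printf '%s' "$value" | tr -d '[:space:]')
    if [ -n "$key" ] && [ "$value" != "1" ]; then
      echo "NOT SET: $key = $value"
      bad=1
    fi
  done
  return "$bad"
}

# Demo on canned input; ip_forward is deliberately left at 0 here.
printf '%s\n' \
  'net.bridge.bridge-nf-call-iptables = 1' \
  'net.ipv4.ip_forward = 0' | check_sysctl || echo "some parameters are missing"
```

On a real node, pipe the `sysctl net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward` command into `check_sysctl` instead of the canned lines.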

1.2 Install containerd

Reference: the official Getting started with containerd guide. The detailed steps are as follows:

    # Download containerd and unpack it under /usr/local
    wget https://ghproxy.com/https://github.com/containerd/containerd/releases/download/v1.7.5/containerd-1.7.5-linux-amd64.tar.gz
    tar Cxzvf /usr/local containerd-1.7.5-linux-amd64.tar.gz

    # Install the systemd unit and start containerd
    wget https://ghproxy.com/https://raw.githubusercontent.com/containerd/containerd/main/containerd.service -O /usr/lib/systemd/system/containerd.service
    systemctl daemon-reload && systemctl enable --now containerd

    # Install runc
    wget https://ghproxy.com/https://github.com/opencontainers/runc/releases/download/v1.1.9/runc.amd64
    install -m 755 runc.amd64 /usr/local/sbin/runc

    # Install the CNI plugins
    wget https://ghproxy.com/https://github.com/containernetworking/plugins/releases/download/v1.3.0/cni-plugins-linux-amd64-v1.3.0.tgz
    mkdir -p /opt/cni/bin
    tar Cxzvf /opt/cni/bin cni-plugins-linux-amd64-v1.3.0.tgz

    # Generate the default configuration
    mkdir -p /etc/containerd
    containerd config default > /etc/containerd/config.toml

    vi /etc/containerd/config.toml

In config.toml, set SystemdCgroup = true so that runc uses the systemd cgroup driver:

    [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]
      ...
      [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
        SystemdCgroup = true

Also change sandbox_image = "registry.k8s.io/pause:3.9"

to sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.9"

and add the registry mirrors:

    [plugins."io.containerd.grpc.v1.cri".registry.mirrors]
      [plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]
        endpoint = ["http://mirrors.ustc.edu.cn"]
      [plugins."io.containerd.grpc.v1.cri".registry.mirrors."*"]
        endpoint = ["http://hub-mirror.c.163.com"]

    systemctl restart containerd

    [root@stache31 ~]
    tcp 0 0 127.0.0.1:36669 0.0.0.0:* LISTEN
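The two single-line edits to config.toml (SystemdCgroup and sandbox_image) can be applied without opening an editor. This is a sketch under assumptions: `patch_containerd_config` is a hypothetical helper, and it assumes the generated file still contains the line `SystemdCgroup = false` and the default pause image line quoted above. The registry mirror tables still have to be added by hand.

```shell
#!/bin/sh
# patch_containerd_config (hypothetical helper): applies the SystemdCgroup and
# sandbox_image edits from this section to the given config.toml.
# Assumes the file still holds the default values for both settings.
patch_containerd_config() {
  cfg=$1
  tmp=$(mktemp) || return 1
  if sed \
      -e 's/SystemdCgroup = false/SystemdCgroup = true/' \
      -e 's#sandbox_image = "registry.k8s.io/pause:3.9"#sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.9"#' \
      "$cfg" > "$tmp"; then
    # Overwrite in place so the original file's owner and mode are kept.
    cat "$tmp" > "$cfg"
    status=$?
  else
    status=1
  fi
  rm -f "$tmp"
  return "$status"
}

# Demo on a scratch file with two representative lines.
demo=$(mktemp)
printf '%s\n' \
  '    sandbox_image = "registry.k8s.io/pause:3.9"' \
  '            SystemdCgroup = false' > "$demo"
patch_containerd_config "$demo" && cat "$demo"
rm -f "$demo"
```

On a real host the call would be `patch_containerd_config /etc/containerd/config.toml`, followed by `systemctl restart containerd`.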

2. Install the k8s cluster with kubeadm

Following the official tutorial, the detailed steps are as follows:

2.1 Prerequisites

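    # Set SELinux to permissive mode, now and after reboot
    sudo setenforce 0
    sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

    # Stop and disable the firewall
    systemctl disable --now firewalld

    # Turn swap off now and comment out the swap entries in /etc/fstab
    swapoff -a
    sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab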
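To see what the fstab edit does before running it against the real file, the same sed expression can be tried on sample input. In this sketch `comment_swap` is a hypothetical wrapper and the two fstab lines are made up for illustration.

```shell
#!/bin/sh
# comment_swap (hypothetical helper): the same sed expression as above, applied
# to stdin instead of /etc/fstab so it can be tried safely.
comment_swap() {
  sed '/ swap / s/^\(.*\)$/#\1/g'
}

# Canned fstab-like input: one root entry and one swap entry.
printf '%s\n' \
  '/dev/mapper/centos-root / xfs defaults 0 0' \
  '/dev/mapper/centos-swap swap swap defaults 0 0' | comment_swap
```

Only the swap entry gains a leading `#`. Note that the address `/ swap /` needs literal spaces around the word, so an fstab that separates fields with tabs would not match, and running the command a second time prepends another `#` to lines that are already commented out.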

2.2 Initialize the cluster

    # Add the Kubernetes v1.28 yum repository
    cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=https://pkgs.k8s.io/core:/stable:/v1.28/rpm/
    enabled=1
    gpgcheck=1
    gpgkey=https://pkgs.k8s.io/core:/stable:/v1.28/rpm/repodata/repomd.xml.key
    exclude=kubelet kubeadm kubectl cri-tools kubernetes-cni
    EOF

    # Install kubelet, kubeadm and kubectl, then enable kubelet
    yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes
    systemctl enable --now kubelet

    # Initialize the control plane
    [root@centos ~]# kubeadm init \
      --kubernetes-version=1.28.1 \
      --apiserver-advertise-address=192.168.1.31 \
      --image-repository registry.aliyuncs.com/google_containers \
      --service-cidr=10.96.0.0/16 \
      --pod-network-cidr=10.100.0.0/16

    # Give the current user kubectl access
    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config

    # Point crictl at the containerd socket
    crictl config --set runtime-endpoint=unix:///run/containerd/containerd.sock
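The service CIDR, the pod CIDR and the node network should not overlap. The sketch below shows one way to check that with plain shell arithmetic. The helper names (`ip_to_int`, `cidr_contains`, `cidrs_overlap`) are hypothetical and not part of kubeadm; only IPv4 is handled.

```shell
#!/bin/sh
# Hypothetical helpers for IPv4 CIDR checks; none of this is part of kubeadm.

# ip_to_int IP: convert a dotted quad such as 10.96.0.0 to a single integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the address into its four octets
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# cidr_contains CIDR IP: succeed when IP falls inside CIDR.
cidr_contains() {
  net=${1%/*}
  bits=${1#*/}
  mask=$(( (4294967295 << (32 - bits)) & 4294967295 ))
  [ $(( $(ip_to_int "$net") & mask )) -eq $(( $(ip_to_int "$2") & mask )) ]
}

# cidrs_overlap A B: two CIDR blocks overlap exactly when the larger block
# (the one with the shorter prefix) contains the base address of the other.
cidrs_overlap() {
  if [ "${1#*/}" -le "${2#*/}" ]; then
    cidr_contains "$1" "${2%/*}"
  else
    cidr_contains "$2" "${1%/*}"
  fi
}

# Values taken from the kubeadm init command above.
if cidrs_overlap 10.96.0.0/16 10.100.0.0/16; then
  echo "service and pod CIDRs overlap"
else
  echo "service and pod CIDRs are disjoint"
fi
```

The same helpers can confirm that the advertise address stays outside both ranges, for example `cidr_contains 10.100.0.0/16 192.168.1.31` fails, as it should.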

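3. Install the network plugin

3.1 Install Calico

Kubernetes.io lists the available network plugins on its Installing Addons page. We choose **Calico**; see the official installation guide. The detailed steps are as follows:

Note ⚠️: the network plugin only needs to be installed from the master node.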

    # 1. Install the Tigera Calico operator and CRDs
    kubectl create -f https://ghproxy.com/https://raw.githubusercontent.com/projectcalico/calico/v3.26.1/manifests/tigera-operator.yaml

    # 2. Download the custom resources
    wget https://ghproxy.com/https://raw.githubusercontent.com/projectcalico/calico/v3.26.1/manifests/custom-resources.yaml

    # Check that the cidr value in the downloaded custom-resources.yaml matches the
    # cluster's pod CIDR (the IP address range the Pod network may use, which is
    # 10.100.0.0/16 in this tutorial).
    #
    #   ipPools:
    #   - blockSize: 26
    #     cidr: 192.168.0.0/16
    #
    kubectl create -f custom-resources.yaml

    # 3. Confirm that all pods are in the Running state
    watch kubectl get pods -n calico-system
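The cidr check and correction can be done with a few lines of text processing before `kubectl create -f custom-resources.yaml` is run. This is a sketch only: `calico_pool_cidr` and `set_calico_pool_cidr` are hypothetical helpers, and the demo uses a scratch file that mimics the excerpt above rather than the real manifest.

```shell
#!/bin/sh
# Hypothetical helpers; they only do text processing on custom-resources.yaml.

# calico_pool_cidr FILE: print the first "cidr:" value found in FILE.
calico_pool_cidr() {
  sed -n 's/^[[:space:]]*cidr:[[:space:]]*//p' "$1" | head -n 1
}

# set_calico_pool_cidr FILE CIDR: rewrite every "cidr:" value in FILE to CIDR,
# keeping each line's indentation.
set_calico_pool_cidr() {
  tmp=$(mktemp) || return 1
  sed "s#^\([[:space:]]*cidr:\)[[:space:]]*.*#\1 $2#" "$1" > "$tmp" && cat "$tmp" > "$1"
  status=$?
  rm -f "$tmp"
  return "$status"
}

# Demo on a scratch file that mimics the ipPools excerpt.
demo=$(mktemp)
printf '%s\n' '    ipPools:' '    - blockSize: 26' '      cidr: 192.168.0.0/16' > "$demo"
set_calico_pool_cidr "$demo" 10.100.0.0/16
calico_pool_cidr "$demo"
rm -f "$demo"
```

In this tutorial the change matters for a second reason: the default pool 192.168.0.0/16 would also contain the node address 192.168.1.31.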

3.2 Join the worker nodes

See the official documentation on joining nodes. Details are as follows:

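    # Run on each worker node, using the token and hash printed by your own kubeadm init
    kubeadm join 192.168.1.31:6443 --token e8nom8.r9od2jsiry2tqwch --discovery-token-ca-cert-hash sha256:6a4cd514e9e0fd968e1dc3506008107fa99b9abc231396c225a7c4acbd8c88ff

    # Back on the control plane, check the nodes and pods
    [root@stache31 ~]# kubectl get nodes
    NAME        STATUS   ROLES           AGE   VERSION
    server-31   Ready    control-plane   17d   v1.28.1
    server-32   Ready    <none>          17d   v1.28.1
    server-33   Ready    <none>          17d   v1.28.1

    kubectl get pods --all-namespaces

    # To use kubectl from a worker node or another machine, copy the admin kubeconfig from the control plane
    mkdir -p $HOME/.kube
    scp root@<control-plane-host>:/etc/kubernetes/admin.conf $HOME/.kube/config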
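When several workers are joined, it can help to list only the nodes that have not become Ready yet. A minimal sketch, assuming the default column layout of `kubectl get nodes`. `not_ready_nodes` is a hypothetical helper, and the demo input is the listing above with server-33 deliberately altered to NotReady.

```shell
#!/bin/sh
# not_ready_nodes (hypothetical helper): reads `kubectl get nodes` output on
# stdin and prints the name of every node whose STATUS is not exactly "Ready".
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Demo on canned output; server-33 is deliberately altered to NotReady.
printf '%s\n' \
  'NAME        STATUS     ROLES           AGE   VERSION' \
  'server-31   Ready      control-plane   17d   v1.28.1' \
  'server-32   Ready      <none>          17d   v1.28.1' \
  'server-33   NotReady   <none>          17d   v1.28.1' | not_ready_nodes
```

On a live cluster the call is `kubectl get nodes | not_ready_nodes`. Because the comparison is exact, a cordoned node shown as `Ready,SchedulingDisabled` is reported as well.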

4. Other installations

4.1 Helm

Installation reference: Installing Helm

    wget https://get.helm.sh/helm-v3.12.3-linux-amd64.tar.gz
    tar -zxvf helm-v3.12.3-linux-amd64.tar.gz
    mv linux-amd64/helm /usr/local/bin/helm
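Before moving a downloaded binary into /usr/local/bin, its checksum can be compared with the published one. The sketch below assumes you have copied the expected SHA-256 digest for the archive from the Helm release page. `verify_sha256` is a hypothetical helper, and the demo computes the digest of a scratch file on the spot instead of using a real release digest.

```shell
#!/bin/sh
# verify_sha256 FILE EXPECTED_HEX (hypothetical helper): succeed only when the
# SHA-256 digest of FILE equals EXPECTED_HEX.
verify_sha256() {
  actual=$(sha256sum "$1" | awk '{ print $1 }')
  [ "$actual" = "$2" ]
}

# Demo on a scratch file; the expected digest is computed here, not published.
demo=$(mktemp)
printf 'example archive contents\n' > "$demo"
expected=$(sha256sum "$demo" | awk '{ print $1 }')
if verify_sha256 "$demo" "$expected"; then
  echo "checksum OK"
else
  echo "checksum mismatch"
fi
rm -f "$demo"
```

For the archive above the call would look like `verify_sha256 helm-v3.12.3-linux-amd64.tar.gz <published-digest>`, run before the `tar` step.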

4.2 Install the Kubernetes Dashboard with Helm

    helm repo add kubernetes-dashboard https://kubernetes.github.io/dashboard/
    helm upgrade --install kubernetes-dashboard kubernetes-dashboard/kubernetes-dashboard --create-namespace --namespace kubernetes-dashboard

2. Expose the port

    kubectl -n kubernetes-dashboard port-forward svc/kubernetes-dashboard 8443:443

Then open https://127.0.0.1:8443 in a browser.

3. Create a Dashboard admin user

Reference: creating-sample-user

a. Create the file kubernertes-dashboard-adminuser.yaml

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: admin-user
      namespace: kubernetes-dashboard
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: admin-user
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: cluster-admin
    subjects:
    - kind: ServiceAccount
      name: admin-user
      namespace: kubernetes-dashboard
    ---
    apiVersion: v1
    kind: Secret
    metadata:
      name: admin-user
      namespace: kubernetes-dashboard
      annotations:
        kubernetes.io/service-account.name: "admin-user"
    type: kubernetes.io/service-account-token

b. Apply it

    kubectl apply -f kubernertes-dashboard-adminuser.yaml

c. Get the admin user's token and paste it into the Dashboard login page.

    kubectl get secret admin-user -n kubernetes-dashboard -o jsonpath={".data.token"} | base64 -d
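The trailing `base64 -d` is needed because values under `.data` in a Secret are stored base64-encoded. A small sketch of that decoding step, using a made-up value rather than a real token. `decode_secret_value` is a hypothetical wrapper.

```shell
#!/bin/sh
# decode_secret_value (hypothetical helper): decode one base64-encoded value,
# as stored under .data in a Secret, read from stdin.
decode_secret_value() {
  base64 -d
}

# Demo: encode a made-up value the way a Secret stores it, then decode it again.
printf '%s' 'sample-token' | base64 | decode_secret_value
```

Pasting the undecoded string into the login page would not work, since the Dashboard expects the decoded token.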

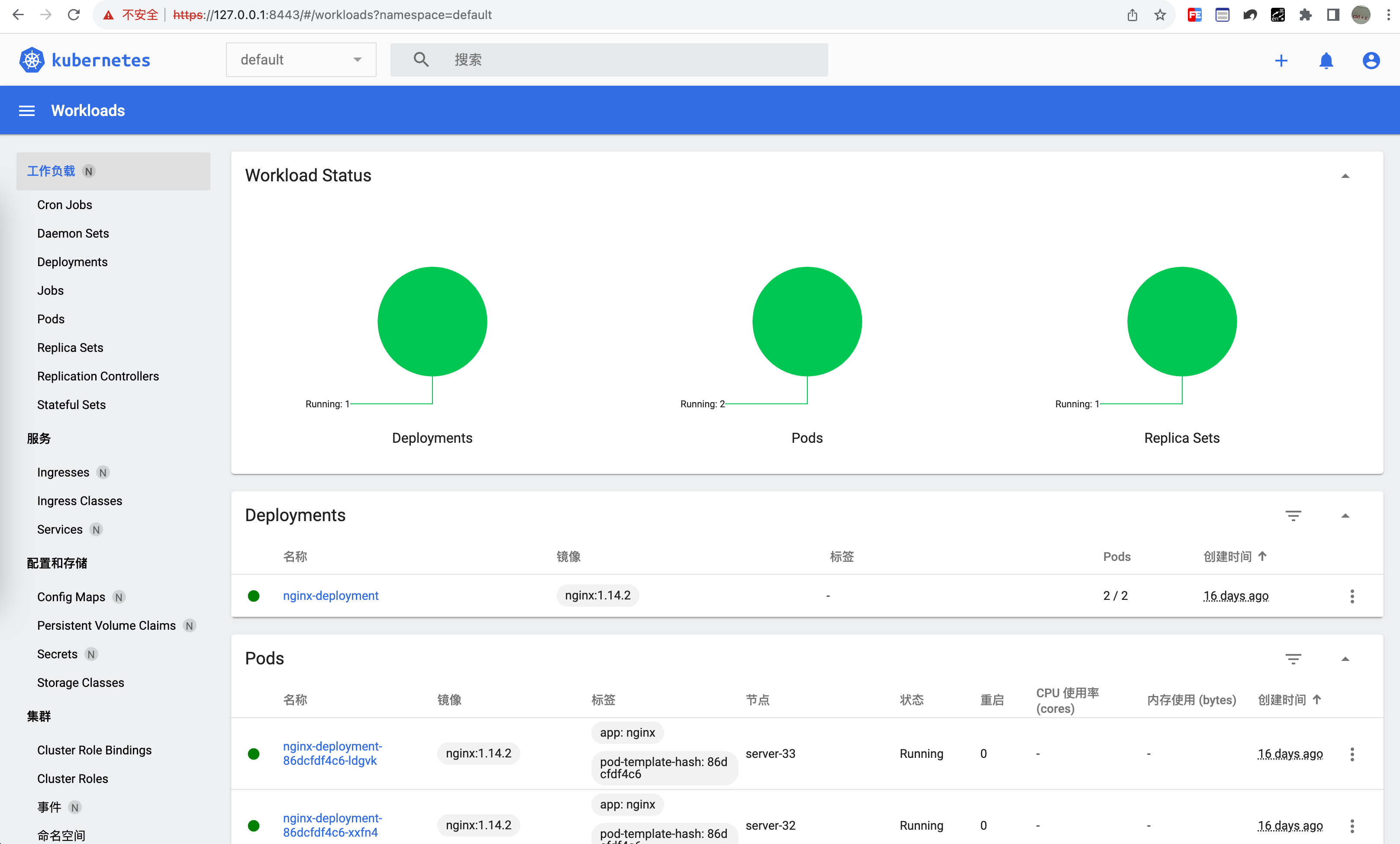

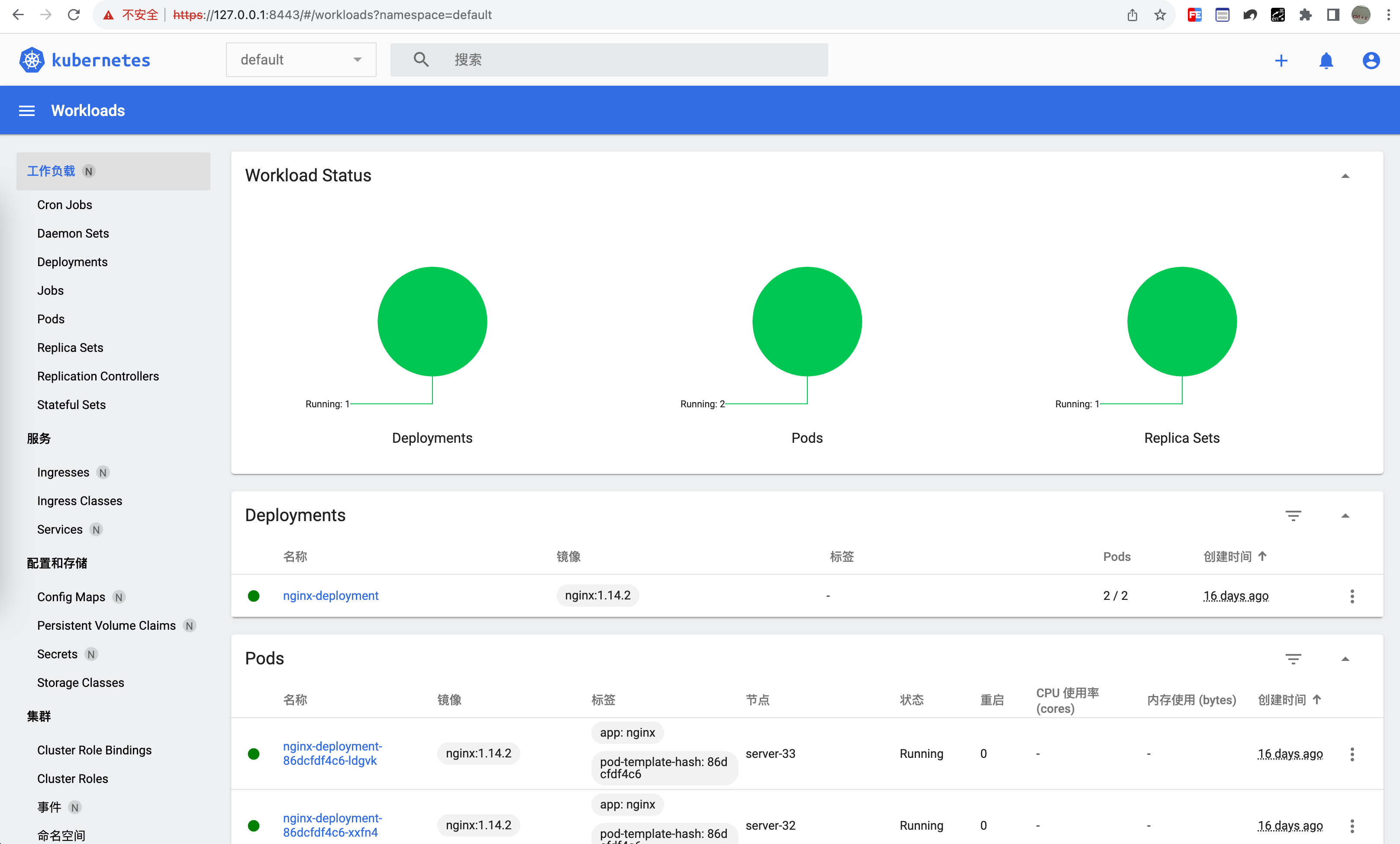

4. The Dashboard view